简介

在Spark On K8s场景下, 涉及计算资源的设置, 除了driver.cores、 executor.cores、 driver.memory 和executor.memory这4个Spark自有的参数外, 还会受到K8s对资源控制的影响, 例如k8s request和limits。为此我们有必要了解在spark on ks的场景下,这些参数是否存在相互影响,以及在实际的应用中应该如何设置,才是最好的选择。

在spark on k8s场景下,可以通过两种方式设置,spark的资源参数,一个是在spark-submit提交程序的时候,通过–conf 设置资源参数,另外一种就是Pod Template中设置资源参数。

通过–conf配置

用例一:

| driver.cores | executor.cores | driver.request.cores | executor.request.cores | driver.limit.cores | executor.limit.cores |

|---|---|---|---|---|---|

| 1 | 1 | 1.5 | 1.5 | 2 | 2 |

driver.cores < driver.limit.cores driver可以正常启动运行

executor.cores < executor.limit.cores executor可以正常启动运行

用例二:

| driver.cores | executor.cores | driver.request.cores | executor.request.cores | driver.limit.cores | executor.limit.cores |

|---|---|---|---|---|---|

| 2 | 2 | 1.5 | 1.5 | 2 | 2 |

driver.cores = driver.limit.cores driver可以正常启动运行

executor.cores = executor.limit.cores executor可以正常启动运行

用例三:按理说是不能正常启动的因为需要的资源超过了限制资源

| driver.cores | executor.cores | driver.request.cores | executor.request.cores | driver.limit.cores | executor.limit.cores |

|---|---|---|---|---|---|

| 3 | 3 | 1.5 | 1.5 | 2 | 2 |

driver.cores > driver.limit.cores driver能启动运行

executor.cores > executor.limit.cores executor能启动运行

结论:根据实际情况来看,在–conf 配置方式下,K8S的request和limit资源参数实际上并不起作用,起作用的还是spark自有的driver和executor配置

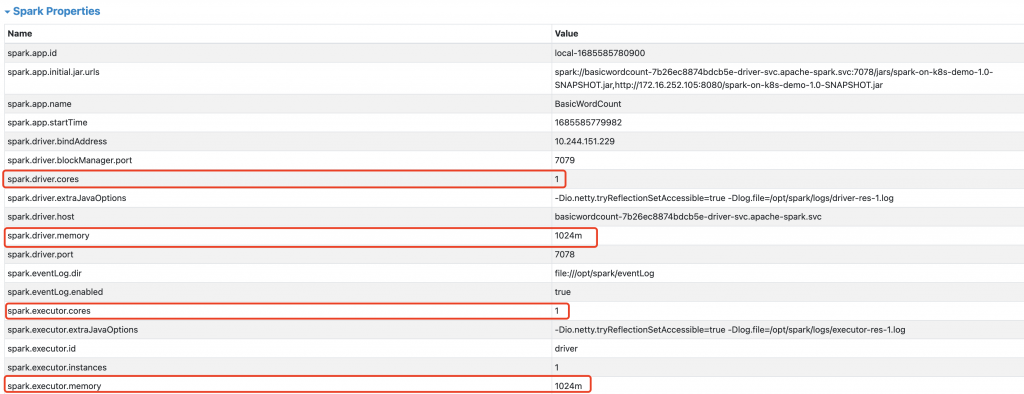

提交命令如下:

./spark-3.2.3/bin/spark-submit \ --name BasicWordCount \ --verbose \ --master k8s://https://172.16.252.105:6443 \ --deploy-mode cluster \ --conf spark.network.timeout=300 \ --conf spark.executor.instances=1 \ --conf spark.driver.cores=1 \ --conf spark.executor.cores=1 \ --conf spark.kubernetes.driver.request.cores=1.5 \ --conf spark.kubernetes.driver.limit.cores=2 \ --conf spark.kubernetes.executor.request.cores=1.5 \ --conf spark.kubernetes.executor.limit.cores=2 \ --conf spark.driver.memory=1024m \ --conf spark.executor.memory=1024m \ --conf spark.driver.extraJavaOptions="-Dio.netty.tryReflectionSetAccessible=true -Dlog.file=/opt/spark/logs/driver-res-1.log" \ --conf spark.executor.extraJavaOptions="-Dio.netty.tryReflectionSetAccessible=true -Dlog.file=/opt/spark/logs/executor-res-1.log" \ --conf spark.eventLog.enabled=true \ --conf spark.eventLog.dir=file:///opt/spark/eventLog \ --conf spark.history.fs.logDirectory=file:///opt/spark/eventLog \ --conf spark.kubernetes.namespace=apache-spark \ --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark-service-account \ --conf spark.kubernetes.authenticate.executor.serviceAccountName=spark-service-account \ --conf spark.kubernetes.container.image.pullPolicy=IfNotPresent \ --conf spark.kubernetes.container.image=apache/spark:v3.2.3 \ --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-logs-pvc.mount.path=/opt/spark/logs \ --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-logs-pvc.options.claimName=spark-logs-pvc \ --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.eventlog-pvc.mount.path=/opt/spark/eventLog \ --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.eventlog-pvc.options.claimName=spark-historyserver-pvc \ --conf spark.kubernetes.executor.volumes.persistentVolumeClaim.spark-logs-pvc.mount.path=/opt/spark/logs \ --conf spark.kubernetes.executor.volumes.persistentVolumeClaim.spark-logs-pvc.options.claimName=spark-logs-pvc \ --conf spark.kubernetes.driverEnv.TZ=Asia/Shanghai \ --conf spark.kubernetes.executorEnv.TZ=Asia/Shanghai \ --class org.fblinux.StreamWordCount \ http://172.16.252.105:8080/spark-on-k8s-demo-1.0-SNAPSHOT.jar \ http://172.16.252.105:8080/wc_input.txt

通过Pod Template配置

用例一:

| driver.cores | executor.cores | driver.request.cores | executor.request.cores | driver.limit.cores | executor.limit.cores |

|---|---|---|---|---|---|

| 1 | 3 | 1.5 | 1.5 | 2 | 2 |

driver.cores < driver.limit.cores driver可以启动但会报错

executor.cores > executor.limit.cores executor不可以启动

用例二:

| driver.cores | executor.cores | driver.request.cores | executor.request.cores | driver.limit.cores | executor.limit.cores |

|---|---|---|---|---|---|

| 3 | 3 | 1.5 | 1.5 | 2 | 2 |

driver.cores > driver.limit.cores driver不可以启动

executor.cores > executor.limit.cores executor不可以启动

用例3:

| driver.memory | executor.memory | driver.request.memory | executor.request.memory | driver.limit.memory | executor.limit.memory |

|---|---|---|---|---|---|

| 4g | 4g | 1.5g | 1.5g | 2g | 2g |

driver.memory > driver.limit.memory driver可以启动

executor.memory < executor.limit.memory executor可以启动

结论:使用pod template可以限制cpu核数,但不能限制内存。

driver template文件:

[root@k8s-demo001 nginx_down]# cat driver-res.yaml

apiVersion: v1

kind: Pod

metadata:

labels:

app.kubernetes.io/name: apache-spark

app.kubernetes.io/instance: apache-spark

app.kubernetes.io/version: v3.2.3

namespace: apache-spark

name: driver

spec:

serviceAccountName: spark-service-account

hostAliases:

- ip: "172.16.252.105"

hostnames:

- "k8s-demo001"

- ip: "172.16.252.134"

hostnames:

- "k8s-demo002"

- ip: "172.16.252.135"

hostnames:

- "k8s-demo003"

- ip: "172.16.252.136"

hostnames:

- "k8s-demo004"

containers:

- image: apache/spark:v3.2.3

name: driver

imagePullPolicy: IfNotPresent

env:

- name: TZ

value: Asia/Shanghai

resources:

requests:

cpu: 1.5

memory: 1.5Gi

limits:

cpu: 2

memory: 2Gi

volumeMounts:

- name: spark-historyserver # 挂载eventLog归档目录

mountPath: /opt/spark/eventLog

- name: spark-logs # 挂载日志

mountPath: /opt/spark/logs

volumes:

- name: spark-historyserver

persistentVolumeClaim:

claimName: spark-historyserver-pvc

- name: spark-logs

persistentVolumeClaim:

claimName: spark-logs-pvc

executor template文件:

[root@k8s-demo001 nginx_down]# cat executor-res.yaml

apiVersion: v1

kind: Pod

metadata:

labels:

app.kubernetes.io/name: apache-spark

app.kubernetes.io/instance: apache-spark

app.kubernetes.io/version: v3.2.3

namespace: apache-spark

name: executor

spec:

serviceAccountName: spark-service-account

hostAliases:

- ip: "172.16.252.105"

hostnames:

- "k8s-demo001"

- ip: "172.16.252.134"

hostnames:

- "k8s-demo002"

- ip: "172.16.252.135"

hostnames:

- "k8s-demo003"

- ip: "172.16.252.136"

hostnames:

- "k8s-demo004"

containers:

- image: apache/spark:v3.2.3

name: executor

imagePullPolicy: IfNotPresent

env:

- name: TZ

value: Asia/Shanghai

resources:

requests:

cpu: 1.5

memory: 1.5Gi

limits:

cpu: 2

memory: 2Gi

volumeMounts:

- name: spark-historyserver # 挂载eventLog归档目录

mountPath: /opt/spark/eventLog

- name: spark-logs # 挂载日志

mountPath: /opt/spark/logs

volumes:

- name: spark-historyserver

persistentVolumeClaim:

claimName: spark-historyserver-pvc

- name: spark-logs

persistentVolumeClaim:

claimName: spark-logs-pvc

提交命令:

./spark-3.2.3/bin/spark-submit \ --name BasicWordCount \ --verbose \ --master k8s://https://172.16.252.105:6443 \ --deploy-mode cluster \ --conf spark.network.timeout=300 \ --conf spark.executor.instances=1 \ --conf spark.driver.cores=1 \ --conf spark.executor.cores=1 \ --conf spark.driver.memory=4g \ --conf spark.executor.memory=4g \ --conf spark.driver.extraJavaOptions="-Dio.netty.tryReflectionSetAccessible=true -Dlog.file=/opt/spark/logs/driver-res-6.log" \ --conf spark.executor.extraJavaOptions="-Dio.netty.tryReflectionSetAccessible=true -Dlog.file=/opt/spark/logs/executor-res-6.log" \ --conf spark.eventLog.enabled=true \ --conf spark.eventLog.dir=file:///opt/spark/eventLog \ --conf spark.history.fs.logDirectory=file:///opt/spark/eventLog \ --conf spark.kubernetes.namespace=apache-spark \ --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark-service-account \ --conf spark.kubernetes.authenticate.executor.serviceAccountName=spark-service-account \ --conf spark.kubernetes.container.image.pullPolicy=IfNotPresent \ --conf spark.kubernetes.container.image=apache/spark:v3.2.3 \ --conf spark.kubernetes.driver.podTemplateFile=http://172.16.252.105:8080/driver-res.yaml \ --conf spark.kubernetes.executor.podTemplateFile=http://172.16.252.105:8080/executor-res.yaml \ --class org.fblinux.BasicWordCount \ http://172.16.252.105:8080/spark-on-k8s-demo-1.0-SNAPSHOT.jar \ http://172.16.252.105:8080/wc_input.txt

结论

在实际应用中,CPU配置方面,建议将spark.driver.cores和spark.executor.cores与pod template的limits.cores配置一致,如果不使用pod template,只需要配置spark.driver.cores和spark.executor.cores即可,不需要配置其他参数,不然不光不起作用,放进去还会引起误解 内存方面:无论是方式一还是方式二,对内存的限制都不起作用,实际使用中,将spark.driver.memory和spark.executor.memory与pod template的limits.memory配置一致即可,或者只配置spark.driver.memory和spark.executor.memory

转载请注明:西门飞冰的博客 » (5)Spark on K8S 计算资源配置