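1. Introduction

In the earlier examples, everything belonging to a Spark job is destroyed once the job exits, so there is no way to go back and debug or tune a program that has already run. Deploying the Spark History Server addresses this. It persists the history of Spark applications, so that even after an application has finished, users can still inspect and analyze how it executed and what its performance metrics were.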

2. Deploying the History Server

1) Create a PVC for the Spark History Server to hold the archived eventLog data of Spark applications.

[root@k8s-demo001 ~]# cat spark-historyserver-pvc.yaml
# Persistent storage PVC for the Spark History Server
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: spark-historyserver-pvc   # name of the History Server PVC
  namespace: apache-spark         # namespace the PVC belongs to
spec:
  storageClassName: nfs-storage   # StorageClass name; change to the one in your cluster
  accessModes:
    - ReadWriteMany               # use the ReadWriteMany access mode
  resources:
    requests:
      storage: 1Gi                # storage capacity; adjust as needed

[root@k8s-demo001 ~]# kubectl apply -f spark-historyserver-pvc.yaml
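Before moving on, it can help to confirm that the claim was actually bound. The following is a minimal sketch, assuming `kubectl` is on the PATH and configured for the target cluster; the helper name `pvc_phase` is hypothetical and not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: print the phase of a PVC (for example Pending or Bound).
# Assumes kubectl is on PATH and points at the target cluster.
pvc_phase() {
  # $1 = PVC name, $2 = namespace
  kubectl get pvc "$1" -n "$2" -o jsonpath='{.status.phase}'
}

# Usage (against a live cluster):
#   pvc_phase spark-historyserver-pvc apache-spark
```

If the StorageClass uses the WaitForFirstConsumer binding mode, the claim may stay Pending until a Pod mounts it, so a Pending result at this point is not necessarily an error.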

2) Write the History Server ConfigMap, which holds the eventLog-related configuration.

[root@k8s-demo001 ~]# cat spark-historyserver-conf.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: spark-historyserver-conf
  namespace: apache-spark
  annotations:
    kubesphere.io/creator: admin
data:
  spark-defaults.conf: |
    spark.eventLog.enabled true
    spark.eventLog.compress true
    spark.eventLog.dir file:///opt/spark/eventLog
    spark.yarn.historyServer.address localhost:18080
    spark.history.ui.port 18080
    spark.history.fs.logDirectory file:///opt/spark/eventLog

[root@k8s-demo001 ~]# kubectl apply -f spark-historyserver-conf.yaml
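The History Server only shows applications whose event logs land in the directory it scans, so `spark.eventLog.dir` (where jobs write) and `spark.history.fs.logDirectory` (where the server reads) need to agree. Below is a rough sketch of a check for that, assuming a local copy of `spark-defaults.conf` in which keys and values are separated by whitespace, as in the ConfigMap above. The helper names `conf_value` and `eventlog_dirs_match` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a key from a spark-defaults.conf-style
# file. Assumes "key<whitespace>value" lines, as used in the ConfigMap.
conf_value() {
  # $1 = file, $2 = key
  awk -v k="$2" '$1 == k { print $2 }' "$1"
}

# Hypothetical helper: succeed only if the write directory is set and equals
# the directory the History Server reads from.
eventlog_dirs_match() {
  # $1 = path to a spark-defaults.conf file
  write_dir=$(conf_value "$1" spark.eventLog.dir)
  read_dir=$(conf_value "$1" spark.history.fs.logDirectory)
  [ -n "$write_dir" ] && [ "$write_dir" = "$read_dir" ]
}

# Usage:
#   eventlog_dirs_match ./spark-defaults.conf && echo "directories agree"
```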

3) Deploy the History Server as a Deployment + Service + Ingress.

[root@k8s-demo001 ~]# cat spark-historyserver.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: apache-spark
  labels:
    app: spark-historyserver
    name: spark-historyserver
  name: spark-historyserver
spec:
  replicas: 1
  selector:
    matchLabels:
      name: spark-historyserver
  template:
    metadata:
      namespace: apache-spark
      labels:
        app: spark-historyserver
        name: spark-historyserver
    spec:
      containers:
        - name: spark-historyserver
          image: apache/spark:v3.2.3
          imagePullPolicy: IfNotPresent
          args: ["/opt/spark/bin/spark-class", "org.apache.spark.deploy.history.HistoryServer"]
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: HADOOP_USER_NAME
              value: root
            - name: SPARK_USER
              value: root
            # If you do not use the ConfigMap, configure via SPARK_HISTORY_OPTS instead
            # - name: SPARK_HISTORY_OPTS
            #   value: "-Dspark.eventLog.enabled=true -Dspark.eventLog.dir=file:///opt/spark/eventLog -Dspark.history.fs.logDirectory=file:///opt/spark/eventLog"
          ports:
            - containerPort: 18080
          volumeMounts:
            - name: spark-conf            # mount the History Server configuration
              mountPath: /opt/spark/conf/spark-defaults.conf
              subPath: spark-defaults.conf
            - name: spark-historyserver   # mount the eventLog archive directory
              mountPath: /opt/spark/eventLog
      volumes:                            # volume definitions
        - name: spark-conf
          configMap:
            name: spark-historyserver-conf
        - name: spark-historyserver
          persistentVolumeClaim:
            claimName: spark-historyserver-pvc

#---
#kind: Service
#apiVersion: v1
#metadata:
#  namespace: apache-spark
#  name: spark-historyserver
#spec:
#  type: NodePort
#  ports:
#    - port: 18080
#      nodePort: 31082
#  selector:
#    name: spark-historyserver
# Configure the Ingress to match your environment

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: spark-historyserver
    name: spark-historyserver
  name: spark-historyserver
  namespace: apache-spark
spec:
  selector:
    app: spark-historyserver
  ports:
    - port: 18080
      protocol: TCP
      targetPort: 18080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: apache-spark
  name: spark-historyserver
  annotations:
    nginx.ingress.kubernetes.io/default-backend: ingress-nginx-controller
    nginx.ingress.kubernetes.io/use-regex: 'true'
spec:
  ingressClassName: nginx
  rules:
    - host: "spark.k8s.io"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: spark-historyserver
                port:
                  number: 18080

[root@k8s-demo001 ~]# kubectl apply -f spark-historyserver.yaml
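After applying the manifest, the Deployment needs a moment to pull the image and start the container. One way to wait for it is sketched below, assuming `kubectl` is configured for the cluster; the helper name `wait_for_history_server` and the timeout value in the usage line are illustrative assumptions.

```shell
#!/bin/sh
# Hypothetical helper: block until the spark-historyserver Deployment has
# finished rolling out, or fail once the timeout expires.
wait_for_history_server() {
  # $1 = namespace, $2 = timeout (for example 120s)
  kubectl rollout status deployment/spark-historyserver -n "$1" --timeout="$2"
}

# Usage (against a live cluster):
#   wait_for_history_server apache-spark 120s
```

If the rollout does not complete, `kubectl logs deployment/spark-historyserver -n apache-spark` is a reasonable next place to look; a missing or unreadable `/opt/spark/eventLog` directory is one plausible cause.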

4) Add a hostname-to-IP mapping to the hosts file, where the IP is that of the Kubernetes node on which the Ingress Pod is running.

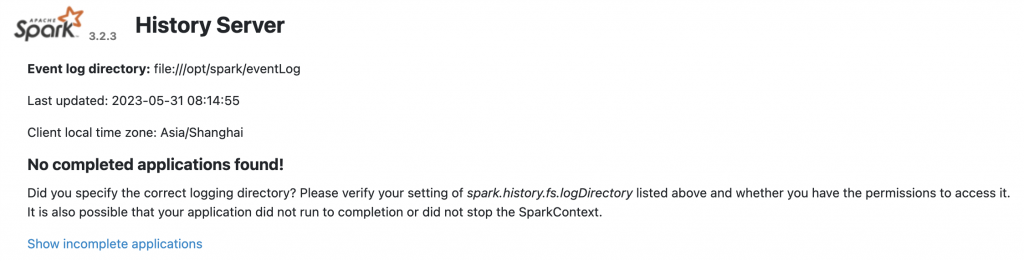

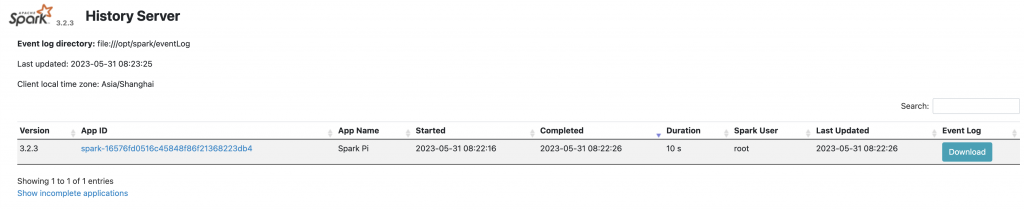
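The hosts mapping from step 4 can be sketched as follows. The node IP is not given in the text, so `NODE_IP` is a placeholder to be replaced with the IP of the node running the Ingress Pod; the helper name `hosts_entry` is hypothetical, and the `ingress-nginx` namespace in the comment is an assumption about where the controller is installed.

```shell
#!/bin/sh
# Hypothetical helper: format one hosts-file line that maps an IP to a hostname.
hosts_entry() {
  # $1 = node IP, $2 = hostname
  printf '%s %s\n' "$1" "$2"
}

# Usage:
#   # Find the node hosting the Ingress controller Pod (namespace is an assumption):
#   #   kubectl get pods -n ingress-nginx -o wide
#   # Then append the mapping on the client machine, with NODE_IP set by you:
#   #   hosts_entry "$NODE_IP" spark.k8s.io | sudo tee -a /etc/hosts
```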

5) Verify access

3. Testing the History Server

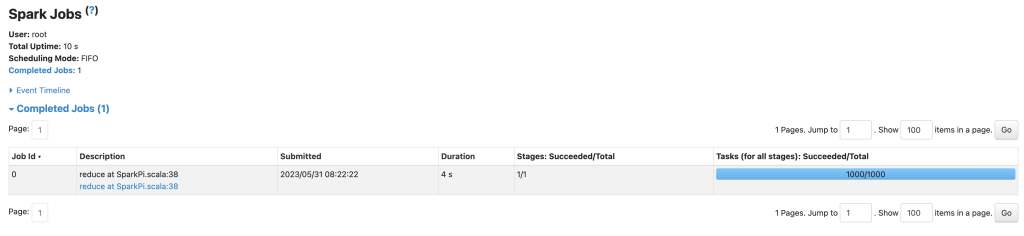

1) Test with SparkPi

./spark-3.2.3/bin/spark-submit \
  --name SparkPi \
  --verbose \
  --master k8s://https://172.16.252.105:6443 \
  --deploy-mode cluster \
  --conf spark.network.timeout=300 \
  --conf spark.executor.instances=3 \
  --conf spark.driver.cores=1 \
  --conf spark.executor.cores=1 \
  --conf spark.driver.memory=1024m \
  --conf spark.executor.memory=1024m \
  --conf spark.driver.extraJavaOptions="-Dio.netty.tryReflectionSetAccessible=true -Dlog.file=/opt/spark/logs/pi-wc.log" \
  --conf spark.executor.extraJavaOptions="-Dio.netty.tryReflectionSetAccessible=true -Dlog.file=/opt/spark/logs/pi-wc.log" \
  --conf spark.eventLog.enabled=true \
  --conf spark.eventLog.dir=file:///opt/spark/eventLog \
  --conf spark.history.fs.logDirectory=file:///opt/spark/eventLog \
  --conf spark.kubernetes.namespace=apache-spark \
  --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark-service-account \
  --conf spark.kubernetes.authenticate.executor.serviceAccountName=spark-service-account \
  --conf spark.kubernetes.container.image.pullPolicy=IfNotPresent \
  --conf spark.kubernetes.container.image=apache/spark:v3.2.3 \
  --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-logs-pvc.mount.path=/opt/spark/logs \
  --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-logs-pvc.options.claimName=spark-logs-pvc \
  --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.eventlog-pvc.mount.path=/opt/spark/eventLog \
  --conf spark.kubernetes.driver.volumes.persistentVolumeClaim.eventlog-pvc.options.claimName=spark-historyserver-pvc \
  --conf spark.kubernetes.executor.volumes.persistentVolumeClaim.spark-logs-pvc.mount.path=/opt/spark/logs \
  --conf spark.kubernetes.executor.volumes.persistentVolumeClaim.spark-logs-pvc.options.claimName=spark-logs-pvc \
  --conf spark.kubernetes.driverEnv.TZ=Asia/Shanghai \
  --conf spark.kubernetes.executorEnv.TZ=Asia/Shanghai \
  --class org.apache.spark.examples.SparkPi \
  local:///opt/spark/examples/jars/spark-examples_2.12-3.2.3.jar \
  1000
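Once the job has finished, the History Server should list it. Besides the web UI, the server exposes a REST endpoint at `/api/v1/applications`, which can be queried from the command line. The sketch below assumes `curl` and `grep` are available and that `spark.k8s.io` resolves as configured in step 4; the helper name `history_has_app` is hypothetical, and the simple text match assumes the JSON is returned with `"name" : "..."` style fields.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the History Server lists an application
# with the given name. Matches the "name" field in the JSON response as text.
history_has_app() {
  # $1 = base URL of the History Server, $2 = application name
  curl -fsS "$1/api/v1/applications" | grep -q "\"name\" *: *\"$2\""
}

# Usage:
#   history_has_app http://spark.k8s.io "Spark Pi" && echo "event log was picked up"
```

The name shown by the History Server may be the one set in the application code rather than the value passed to `--name`, so it is worth trying both if the first lookup finds nothing.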