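Preface

This article describes how to build a front-end event-tracking service with OpenResty and Lua. The service does the following:

(1) Performs simple authentication on the data users upload

(2) Allows cross-origin requests

(3) Reads the uploaded body and some of the request headers, assembles them into a complete event-tracking record, and sends it to Kafka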

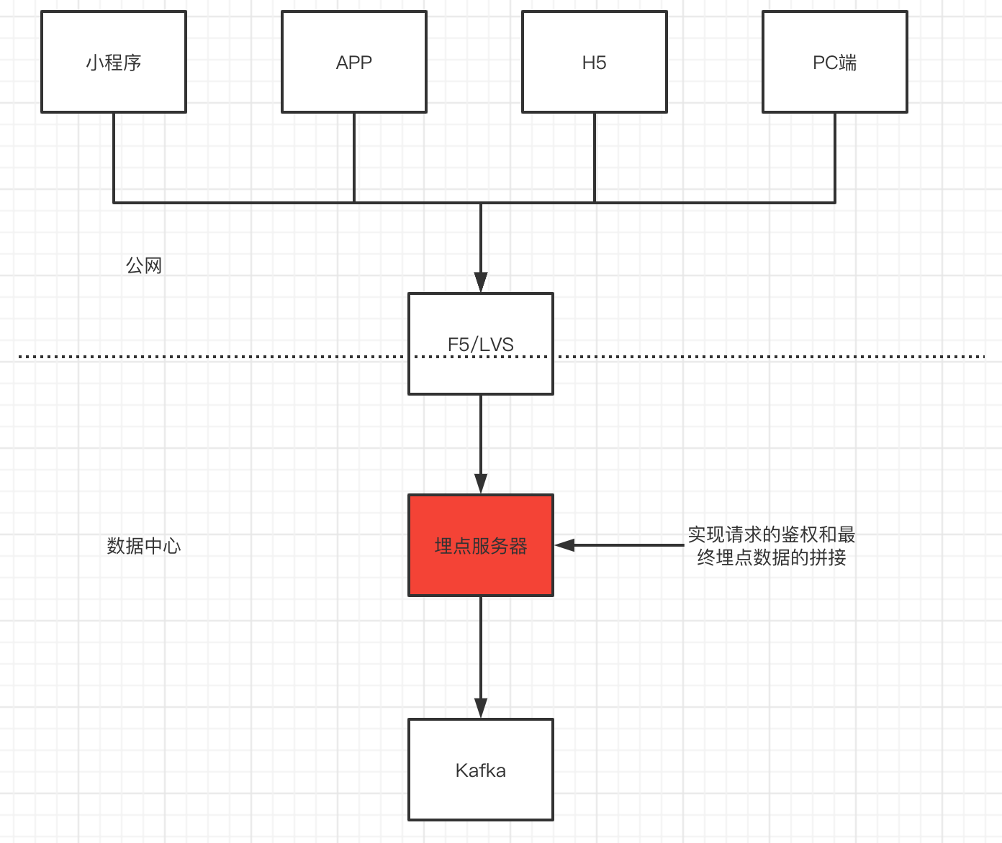

架构图如下:

Configuration

(1) Compile and install OpenResty

wget https://openresty.org/download/openresty-1.19.9.1.tar.gz
tar xf openresty-1.19.9.1.tar.gz
cd openresty-1.19.9.1/
./configure --prefix=/opt/openresty --with-luajit --without-http_redis2_module --with-http_iconv_module
make && make install

(2) Install lua-resty-kafka

# Run these from /opt so that the archive unpacks to /opt/lua-resty-kafka-master
wget https://github.com/doujiang24/lua-resty-kafka/archive/master.zip
unzip master.zip
cp -rf /opt/lua-resty-kafka-master/lib/resty/kafka/ /opt/openresty/lualib/resty/
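A quick check after the copy can catch a wrong source path before nginx fails on `require("resty.kafka.producer")`. The sketch below assumes the /opt/openresty prefix used in the build step; the function name `check_resty_kafka` is made up for illustration and is not part of OpenResty or lua-resty-kafka.

```shell
#!/bin/sh
# check_resty_kafka LUALIB_DIR
# Succeeds if the lua-resty-kafka producer module is present under LUALIB_DIR.
# (Hypothetical helper, shown only as an illustration.)
check_resty_kafka() {
    lualib_dir=$1
    if [ -f "$lualib_dir/resty/kafka/producer.lua" ]; then
        echo "ok: resty.kafka.producer found in $lualib_dir"
    else
        echo "missing: $lualib_dir/resty/kafka/producer.lua" >&2
        return 1
    fi
}
```

With the paths from this article it would be called as `check_resty_kafka /opt/openresty/lualib`.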

(3) Edit the nginx configuration file

cat conf/nginx.conf

user nginx;
worker_processes auto;
worker_rlimit_nofile 100000;

#error_log logs/error.log;
#error_log logs/error.log notice;
#error_log logs/error.log info;

#pid logs/nginx.pid;

events {
    worker_connections 102400;
    multi_accept on;
    use epoll;
}

http {
    include mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log logs/access.log main;
    resolver 10.20.250.188;

    sendfile on;
    #tcp_nopush on;

    #keepalive_timeout 0;
    keepalive_timeout 65;

    underscores_in_headers on;
    gzip on;

    server {
        listen 80;
        server_name 10.26.1.21;
        root html;

        lua_need_request_body on;

        access_log /var/log/nginx/message.access.log main;
        error_log /var/log/nginx/message.error.log notice;

        location = /log {
            # Cross-origin (CORS) settings
            add_header Access-Control-Allow-Origin *;
            add_header Access-Control-Allow-Methods 'GET, POST, OPTIONS';
            add_header Access-Control-Allow-Headers 'DNT,X-Mx-ReqToken,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Authorization,sq-bp';

            if ($request_method = 'OPTIONS') {
                return 204;
            }

            client_body_buffer_size 500k;
            client_max_body_size 5m;

            lua_code_cache on;
            charset utf-8;
            default_type 'application/json';

            # Lua script that handles the request
            content_by_lua_file "/opt/openresty/nginx/lua/testMessage_kafka.lua";
        }
    }
}
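Once the server is running, the cross-origin settings can be checked from the command line. The following is a minimal sketch: `has_cors_headers` is a hypothetical helper that reads raw response headers on standard input and checks for the three `Access-Control-*` headers set in the location block.

```shell
#!/bin/sh
# has_cors_headers: reads raw HTTP response headers on stdin and succeeds
# only if all three Access-Control-* headers from the config are present.
# (Hypothetical helper, shown only as an illustration.)
has_cors_headers() {
    # Strip carriage returns so the header lines match cleanly
    headers=$(tr -d '\r')
    for name in Access-Control-Allow-Origin \
                Access-Control-Allow-Methods \
                Access-Control-Allow-Headers; do
        if ! printf '%s\n' "$headers" | grep -qi "^$name:"; then
            echo "missing header: $name" >&2
            return 1
        fi
    done
    echo "CORS headers present"
}
```

A preflight request against the `server_name` from the config could then be checked with `curl -s -o /dev/null -D - -X OPTIONS http://10.26.1.21/log | has_cors_headers`.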

(4) Edit the Lua script

# cat /opt/openresty/nginx/lua/testMessage_kafka.lua

local producer = require("resty.kafka.producer")
local cjson = require("cjson.safe")

local broker_list = {
    {host = "Kafka broker address 01", port = 9092},
    {host = "Kafka broker address 02", port = 9092},
    {host = "Kafka broker address 03", port = 9092},
}

-- Simple authentication: compare a custom header with the expected value
local sqbp = ngx.req.get_headers()["custom auth header name"]

if sqbp == "custom auth header value" then
    -- Collect client header information to merge into the event record
    local headers = ngx.req.get_headers()
    local ip = headers["X-REAL-IP"] or headers["X_FORWARDED_FOR"] or ngx.var.remote_addr or "0.0.0.0"
    local ua = headers["user-agent"]
    local Origin = headers["Origin"]
    local date = os.date("%Y-%m-%d %H:%M:%S")

    -- Read the uploaded body and combine it with the header fields as JSON.
    -- ngx.req.read_body() returns nothing; the data comes from get_body_data(),
    -- which is nil when the body was spooled to a temporary file.
    ngx.req.read_body()
    local log_json = {}
    log_json["body_data"] = ngx.req.get_body_data()
    log_json["cip"] = ip
    log_json["user-agent"] = ua
    log_json["origin"] = Origin
    log_json["log_timestamp"] = date

    local bp = producer:new(broker_list, { producer_type = "async", batch_num = 1 })
    local sendMsg = cjson.encode(log_json)

    -- The first argument of send() is the Kafka topic name
    local ok, err = bp:send("Kafka topic name", nil, sendMsg)
    if not ok then
        ngx.log(ngx.ERR, 'kafka send err:', err)
        ngx.say("{\"result\": 101,\"message\": \"Send failed: server error\"}")
    else
        ngx.say("{\"result\": 200,\"message\": \"Sent successfully\"}")
    end
else
    ngx.say("{\"result\": 101,\"message\": \"Send failed: invalid parameters\"}")
end

Start the service

# Create the user
useradd nginx
# Make nginx the owner of the OpenResty installation
chown -R nginx:nginx /opt/openresty/
# Create the log directory and grant ownership
mkdir /var/log/nginx/
chown -R nginx:nginx /var/log/nginx/
# Start the service
cd /opt/openresty/nginx/sbin
./nginx -c /opt/openresty/nginx/conf/nginx.conf
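It can help to test the configuration before starting the server, since `nginx -t` reports syntax errors without affecting a running instance. The wrapper below is only a sketch; `start_nginx_checked` is a hypothetical name, and the two arguments are the binary and config paths used in this article.

```shell
#!/bin/sh
# start_nginx_checked NGINX_BIN CONF
# Runs `nginx -t` against CONF first and starts the server only if the
# configuration test passes. (Hypothetical wrapper, for illustration.)
start_nginx_checked() {
    nginx_bin=$1
    conf=$2
    if "$nginx_bin" -t -c "$conf"; then
        "$nginx_bin" -c "$conf"
    else
        echo "configuration test failed; nginx not started" >&2
        return 1
    fi
}
```

For example: `start_nginx_checked /opt/openresty/nginx/sbin/nginx /opt/openresty/nginx/conf/nginx.conf`.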

Send a POST request with Postman as a simple test and check whether Kafka receives the data. If it does, the configuration is working.
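The same test can be run from a terminal with curl instead of Postman. This is a minimal sketch: `send_test_event` is a hypothetical helper, and the URL, header name, header value, and body are all placeholders that must match your own nginx and Lua settings.

```shell
#!/bin/sh
# send_test_event URL HEADER_NAME HEADER_VALUE JSON_BODY
# Posts one JSON event to the collector together with the custom auth header.
# All four arguments are placeholders supplied by the caller.
send_test_event() {
    url=$1
    header_name=$2
    header_value=$3
    body=$4
    # -d makes curl send a POST request with the given body
    curl -s "$url" \
        -H "Content-Type: application/json" \
        -H "$header_name: $header_value" \
        -d "$body"
}
```

For example, `send_test_event http://10.26.1.21/log '<auth header name>' '<auth header value>' '{"event":"page_view"}'` should print the JSON result returned by the Lua script. On the Kafka side, the stock console consumer (`kafka-console-consumer.sh --bootstrap-server <broker>:9092 --topic <topic> --from-beginning`) is one way to confirm that the message arrived.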

When reposting, please credit: 西门飞冰's blog » Collecting event-tracking logs with OpenResty