1.MHA介绍

MHA(Master High Availability)目前在MySQL高可用方面是一个相对成熟的解决方案,它由日本DeNA公司youshimaton(现就职于Facebook公司)开发,是一套优秀的作为MySQL高可用性环境下故障切换和主从提升的高可用软件。在MySQL故障切换过程中,MHA能做到在0~30秒之内自动完成数据库的故障切换操作,并且在进行故障切换的过程中,MHA能在最大程度上保证数据的一致性,以达到真正意义上的高可用。

2.MHA架构

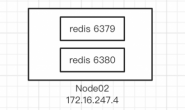

该软件由两部分组成:MHA Manager(管理节点)和MHA Node(数据节点)。MHA Manager可以单独部署在一台独立的机器上管理多个master-slave集群,也可以部署在一台slave节点上。MHA Node运行在每台MySQL服务器上,MHA Manager会定时探测集群中的master节点,当master出现故障时,它可以自动将最新数据的slave提升为新的master,然后将所有其他的slave重新指向新的master。整个故障转移过程对应用程序完全透明。

在MHA自动故障切换过程中,MHA试图从宕机的主服务器上保存二进制日志,最大程度的保证数据的不丢失(配合mysql半同步复制效果更佳),但这并不总是可行的。例如,如果主服务器硬件故障或无法通过ssh访问,MHA没法保存二进制日志,只进行故障转移而丢失了最新的数据。使用MySQL 5.5的半同步复制,可以大大降低数据丢失的风险。MHA可以与半同步复制结合起来。如果只有一个slave已经收到了最新的二进制日志,MHA可以将最新的二进制日志应用于其他所有的slave服务器上,因此可以保证所有节点的数据一致性。

注意:目前MHA主要支持一主多从的架构,要搭建MHA,要求一个复制集群中必须最少有三台数据库服务器,一主二从,即一台充当master,一台充当备用master,另外一台充当从库,因为至少需要三台服务器,出于机器成本的考虑,淘宝也在该基础上进行了改造,目前淘宝TMHA已经支持一主一从。

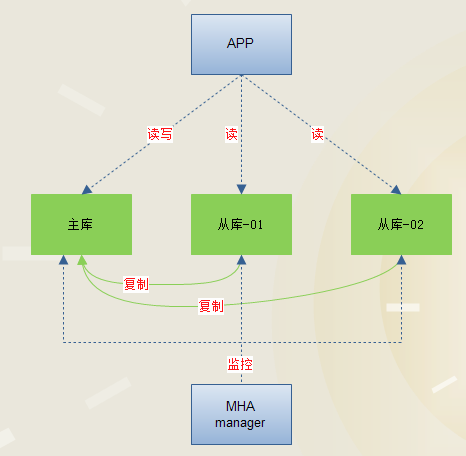

2.1.MHA架构图

正常工作时架构图:

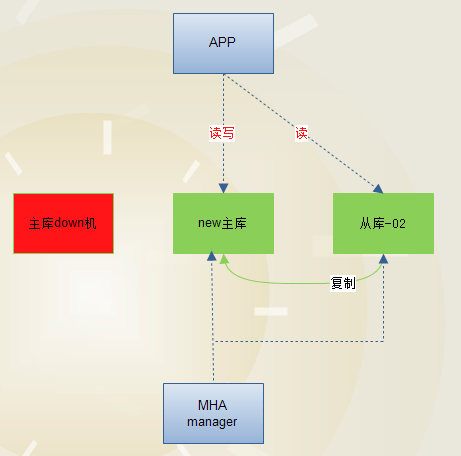

主库down机时架构:

2.2.故障故障转移过程

(1)从宕机崩溃的master保存二进制日志事件(binlog events);

(2)识别含有最新更新的slave;

(3)应用差异的中继日志(relay log)到其他的slave;

(4)应用从master保存的二进制日志事件(binlog events);

(5)提升一个slave为新的master;

(6)使其他的slave连接新的master进行复制;

(7)在新的master启动vip地址,保证前端请求可以发送到新的master。

3.搭建环境介绍

3.1.服务器信息

| 主机名 | IP | 操作系统 | 服务器角色 |

| node1.fblinux.com | 192.168.100.201 | Centos 6.8 | 主库 |

| node2.fblinux.com | 192.168.100.202 | Centos 6.8 | 从库\备库 |

| node3.fblinux.com | 192.168.100.203 | Centos 6.8 | 从库 |

| node4.fblinux.com | 192.168.100.204 | Centos 6.8 | MHA manager |

3.2.准备工作

(1)所有节点需要配置ssh免密钥

(2)所有节点时间同步

(3)关闭防火墙和selinux

4.Mysql主从同步配置

4.1.Mysql安装

yum -y install mysql mysql-server

4.2.配置文件设置

主配置文件设置

server-id=1 log-bin=mysql-logbin

从配置文件设置(注意:不同从库server-id需要配置不同)

log-bin=mysql-logbin relay-log = relay-bin server-id = 2 slave-skip-errors = all relay_log_purge = 0

4.3.终端配置

(1)主库创建复制用户

grant replication client,replication slave on *.* to 'repluser'@'192.168.100.%' identified by'replpass'; FLUSH PRIVILEGES;

(2)从库配置

查看主机二进制日志当前记录位置

mysql> show master status; +---------------------+----------+--------------+------------------+ | File | Position | Binlog_Do_DB | Binlog_Ignore_DB | +---------------------+----------+--------------+------------------+ | mysql-logbin.000003 | 359 | | | +---------------------+----------+--------------+------------------+ 1 row in set (0.00 sec)

Master信息配置:

mysql> CHANGE MASTER TO MASTER_HOST='192.168.100.201',MASTER_USER='repluser',MASTER_PASSWORD='replpass',MASTER_LOG_FILE='mysql-logbin.000003',MASTER_LOG_POS=359;

Query OK, 0 rows affected (0.03 sec)

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

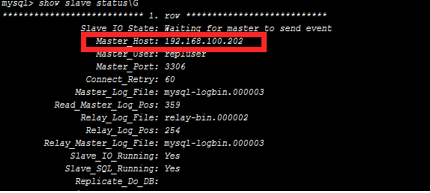

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.100.201

Master_User: repluser

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-logbin.000003

Read_Master_Log_Pos: 359

Relay_Log_File: relay-bin.000002

Relay_Log_Pos: 254

Relay_Master_Log_File: mysql-logbin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 359

Relay_Log_Space: 403

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

1 row in set (0.00 sec)

5.MHA配置

5.1.环境安装

软件下载:https://downloads.mariadb.com/MHA/

(1)所有节点安装mha4mysql-node

yum install -y perl-DBD-MySQL rpm -ivh mha4mysql-node-0.54-0.el6.noarch.rpm

(2)管理节点安装

yum -y install mha4mysql-manager-0.55-0.el6.noarch.rpm

5.2.数据库帐号配置

(1)创建mha管理帐号

mysql> grant all privileges on *.* TO mha@'192.168.%' IDENTIFIED BY 'test'; mysql> flush privileges;

(2)在从库创建复制权限用户,用户名和密码需要和主库保持一致。

mysql> grant replication client,replication slave on *.* to 'repluser'@'192.168.100.%' identified by'replpass'; mysql> FLUSH PRIVILEGES;

5.3.配置文件设置

[root@node4 ~]# mkdir /etc/mha [root@node4 ~]# mkdir /var/log/mha/app1/ -p [root@node4 ~]# vim /etc/mha/app1.conf [server default] # 设置manager的日志 manager_log=/var/log/mha/app1/manager.log # 设置manager的工作目录 manager_workdir=/var/log/mha/app1 # 设置自动故障转移时的切换脚本 master_ip_failover_script=/data/perl/master_ip_failover # 设置手动故障转移时的切换脚本 master_ip_online_change_script=/data/perl/master_ip_failover # 设置mysql中 manager用户名和密码 user=mha password=test # 设置监控主库,发送ping包的时间间隔,默认是3秒,尝试三次没有回应的时候自动进行故障转移 ping_interval=1 # 设置远程mysql在发生切换时binlog的保存位置 remote_workdir=/var/log/mha/app1 # 设置复制用户名密码 repl_password=replpass repl_user=repluser # 设置发生切换后发送报警的脚本 report_script=/data/perl/send_report # 设置ssh的登录用户名 ssh_user=root [server01] hostname=192.168.100.201 port=3306 master_binlog_dir=/var/lib/mysql candidate_master=1 # 设置为候选master,如果设置该参数以后,发生主从切换以后会将此从库提升为主库,即使这个主库不是集群中事件最新的slave check_repl_delay=0 # 默认情况下如果一个slave落后master 100M的relay logs的话,MHA将不会选择该slave作为一个新的master,因为对于这个slave的恢复需要花费很长时间,通过设置check_repl_delay=0,MHA触发切换在选择一个新的master的时候将会忽略复制延时,这个参数对于设置了candidate_master=1的主机非常有用,因为这个候选主在切换的过程中一定是新的master [server02] candidate_master=1 check_repl_delay=0 hostname=192.168.100.202 master_binlog_dir=/var/lib/mysql port=3306 [server03] hostname=192.168.100.203 ignore_fail=1ignore_fail=1 master_binlog_dir=/var/lib/mysql no_master=1 port=3306

主从状态VIP切换脚本(只需修改VIP地址即可)

[root@node4 ~]# cat /data/perl/master_ip_failover

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '192.168.100.201/24'; # Virtual IP #设置VIP地址

my $key = "1";

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

$ssh_user = "root";

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

# $orig_master_host, $orig_master_ip, $orig_master_port are passed.

# If you manage master ip address at global catalog database,

# invalidate orig_master_ip here.

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

# all arguments are passed.

# If you manage master ip address at global catalog database,

# activate new_master_ip here.

# You can also grant write access (create user, set read_only=0, etc) here.

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

`ssh $ssh_user\@cluster1 \" $ssh_start_vip \"`;

exit 0;

}

else {

&usage();

exit 1;

}

}

# A simple system call that enable the VIP on the new master

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

# A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

主从状态切换报警脚本(需要填写自己公司邮箱信息)

[root@node4 ~]# cat /data/perl/send_report

#!/usr/bin/perl

use strict;

use warnings FATAL => 'all';

use Mail::Sender;

use Getopt::Long;

#new_master_host and new_slave_hosts are set only when recovering master succeeded

my ( $dead_master_host, $new_master_host, $new_slave_hosts, $subject, $body );

my $smtp='smtp服务器地址';

my $mail_from='发件人邮箱';

my $mail_user='邮箱登陆用户名';

my $mail_pass='邮箱登陆密码';

my $mail_to=['收件人地址'];

GetOptions(

'orig_master_host=s' => \$dead_master_host,

'new_master_host=s' => \$new_master_host,

'new_slave_hosts=s' => \$new_slave_hosts,

'subject=s' => \$subject,

'body=s' => \$body,

);

mailToContacts($smtp,$mail_from,$mail_user,$mail_pass,$mail_to,$subject,$body);

sub mailToContacts {

my ( $smtp, $mail_from, $user, $passwd, $mail_to, $subject, $msg ) = @_;

open my $DEBUG, "> /tmp/monitormail.log"

or die "Can't open the debug file:$!\n";

my $sender = new Mail::Sender {

ctype => 'text/plain; charset=utf-8',

encoding => 'utf-8',

smtp => $smtp,

from => $mail_from,

auth => 'LOGIN',

TLS_allowed => '0',

authid => $user,

authpwd => $passwd,

to => $mail_to,

subject => $subject,

debug => $DEBUG

};

$sender->MailMsg(

{ msg => $msg,

debug => $DEBUG

}

) or print $Mail::Sender::Error;

return 1;

}

# Do whatever you want here

exit 0;

5.4.检查配置

(1)检查ssh登录

[root@node4 ~]# masterha_check_ssh --conf=/etc/mha/app1.conf Thu Jan 19 20:31:38 2017 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping. Thu Jan 19 20:31:38 2017 - [info] Reading application default configurations from /etc/mha/app1.conf.. Thu Jan 19 20:31:38 2017 - [info] Reading server configurations from /etc/mha/app1.conf.. Thu Jan 19 20:31:38 2017 - [info] Starting SSH connection tests.. Thu Jan 19 20:31:39 2017 - [debug] Thu Jan 19 20:31:38 2017 - [debug] Connecting via SSH from root@192.168.100.201(192.168.100.201:22) to root@192.168.100.202(192.168.100.202:22).. Thu Jan 19 20:31:39 2017 - [debug] ok. Thu Jan 19 20:31:39 2017 - [debug] Connecting via SSH from root@192.168.100.201(192.168.100.201:22) to root@192.168.100.203(192.168.100.203:22).. Thu Jan 19 20:31:39 2017 - [debug] ok. Thu Jan 19 20:31:40 2017 - [debug] Thu Jan 19 20:31:39 2017 - [debug] Connecting via SSH from root@192.168.100.202(192.168.100.202:22) to root@192.168.100.201(192.168.100.201:22).. Thu Jan 19 20:31:39 2017 - [debug] ok. Thu Jan 19 20:31:39 2017 - [debug] Connecting via SSH from root@192.168.100.202(192.168.100.202:22) to root@192.168.100.203(192.168.100.203:22).. Thu Jan 19 20:31:39 2017 - [debug] ok. Thu Jan 19 20:31:40 2017 - [debug] Thu Jan 19 20:31:39 2017 - [debug] Connecting via SSH from root@192.168.100.203(192.168.100.203:22) to root@192.168.100.201(192.168.100.201:22).. Thu Jan 19 20:31:40 2017 - [debug] ok. Thu Jan 19 20:31:40 2017 - [debug] Connecting via SSH from root@192.168.100.203(192.168.100.203:22) to root@192.168.100.202(192.168.100.202:22).. Thu Jan 19 20:31:40 2017 - [debug] ok. Thu Jan 19 20:31:40 2017 - [info] All SSH connection tests passed successfully.

(2)检查mysql复制是否配置成功

[root@node4 ~]# masterha_check_repl --conf=/etc/mha/app1.conf

Thu Jan 19 20:31:58 2017 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Thu Jan 19 20:31:58 2017 - [info] Reading application default configurations from /etc/mha/app1.conf..

Thu Jan 19 20:31:58 2017 - [info] Reading server configurations from /etc/mha/app1.conf..

Thu Jan 19 20:31:59 2017 - [info] MHA::MasterMonitor version 0.55.

Thu Jan 19 20:31:59 2017 - [info] Dead Servers:

Thu Jan 19 20:31:59 2017 - [info] Alive Servers:

Thu Jan 19 20:31:59 2017 - [info] 192.168.100.201(192.168.100.201:3306)

Thu Jan 19 20:31:59 2017 - [info] 192.168.100.202(192.168.100.202:3306)

Thu Jan 19 20:31:59 2017 - [info] 192.168.100.203(192.168.100.203:3306)

Thu Jan 19 20:31:59 2017 - [info] Alive Slaves:

Thu Jan 19 20:31:59 2017 - [info] 192.168.100.202(192.168.100.202:3306) Version=5.1.73-log (oldest major version between slaves) log-bin:enabled

Thu Jan 19 20:31:59 2017 - [info] Replicating from 192.168.100.201(192.168.100.201:3306)

Thu Jan 19 20:31:59 2017 - [info] Primary candidate for the new Master (candidate_master is set)

Thu Jan 19 20:31:59 2017 - [info] 192.168.100.203(192.168.100.203:3306) Version=5.1.73-log (oldest major version between slaves) log-bin:enabled

Thu Jan 19 20:31:59 2017 - [info] Replicating from 192.168.100.201(192.168.100.201:3306)

Thu Jan 19 20:31:59 2017 - [info] Not candidate for the new Master (no_master is set)

Thu Jan 19 20:31:59 2017 - [info] Current Alive Master: 192.168.100.201(192.168.100.201:3306)

Thu Jan 19 20:31:59 2017 - [info] Checking slave configurations..

Thu Jan 19 20:31:59 2017 - [info] read_only=1 is not set on slave 192.168.100.202(192.168.100.202:3306).

Thu Jan 19 20:31:59 2017 - [warning] relay_log_purge=0 is not set on slave 192.168.100.202(192.168.100.202:3306).

Thu Jan 19 20:31:59 2017 - [info] read_only=1 is not set on slave 192.168.100.203(192.168.100.203:3306).

Thu Jan 19 20:31:59 2017 - [warning] relay_log_purge=0 is not set on slave 192.168.100.203(192.168.100.203:3306).

Thu Jan 19 20:31:59 2017 - [info] Checking replication filtering settings..

Thu Jan 19 20:31:59 2017 - [info] binlog_do_db= , binlog_ignore_db=

Thu Jan 19 20:31:59 2017 - [info] Replication filtering check ok.

Thu Jan 19 20:31:59 2017 - [info] Starting SSH connection tests..

Thu Jan 19 20:32:05 2017 - [info] All SSH connection tests passed successfully.

Thu Jan 19 20:32:05 2017 - [info] Checking MHA Node version..

Thu Jan 19 20:32:11 2017 - [info] Version check ok.

Thu Jan 19 20:32:11 2017 - [info] Checking SSH publickey authentication settings on the current master..

Thu Jan 19 20:32:11 2017 - [info] HealthCheck: SSH to 192.168.100.201 is reachable.

Thu Jan 19 20:32:11 2017 - [info] Master MHA Node version is 0.54.

Thu Jan 19 20:32:11 2017 - [info] Checking recovery script configurations on the current master..

Thu Jan 19 20:32:11 2017 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/var/lib/mysql --output_file=/var/log/mha/app1/save_binary_logs_test --manager_version=0.55 --start_file=mysql-logbin.000003

Thu Jan 19 20:32:11 2017 - [info] Connecting to root@192.168.100.201(192.168.100.201)..

Creating /var/log/mha/app1 if not exists.. ok.

Checking output directory is accessible or not..

ok.

Binlog found at /var/lib/mysql, up to mysql-logbin.000003

Thu Jan 19 20:32:11 2017 - [info] Master setting check done.

Thu Jan 19 20:32:11 2017 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Thu Jan 19 20:32:12 2017 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='mha' --slave_host=192.168.100.202 --slave_ip=192.168.100.202 --slave_port=3306 --workdir=/var/log/mha/app1 --target_version=5.1.73-log --manager_version=0.55 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Thu Jan 19 20:32:12 2017 - [info] Connecting to root@192.168.100.202(192.168.100.202:22)..

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to relay-bin.000002

Temporary relay log file is /var/lib/mysql/relay-bin.000002

Testing mysql connection and privileges.. done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Thu Jan 19 20:32:12 2017 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='mha' --slave_host=192.168.100.203 --slave_ip=192.168.100.203 --slave_port=3306 --workdir=/var/log/mha/app1 --target_version=5.1.73-log --manager_version=0.55 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Thu Jan 19 20:32:12 2017 - [info] Connecting to root@192.168.100.203(192.168.100.203:22)..

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to relay-bin.000002

Temporary relay log file is /var/lib/mysql/relay-bin.000002

Testing mysql connection and privileges.. done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Thu Jan 19 20:32:12 2017 - [info] Slaves settings check done.

Thu Jan 19 20:32:12 2017 - [info]

192.168.100.201 (current master)

+--192.168.100.202

+--192.168.100.203

Thu Jan 19 20:32:12 2017 - [info] Checking replication health on 192.168.100.202..

Thu Jan 19 20:32:12 2017 - [info] ok.

Thu Jan 19 20:32:12 2017 - [info] Checking replication health on 192.168.100.203..

Thu Jan 19 20:32:12 2017 - [info] ok.

Thu Jan 19 20:32:12 2017 - [info] Checking master_ip_failover_script status:

Thu Jan 19 20:32:12 2017 - [info] /data/perl/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.100.201 --orig_master_ip=192.168.100.201 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig eth0:1 down==/sbin/ifconfig eth0:1 192.168.100.201/24===

Checking the Status of the script.. OK

ssh: Could not resolve hostname cluster1: Name or service not known

Thu Jan 19 20:32:12 2017 - [info] OK.

Thu Jan 19 20:32:12 2017 - [warning] shutdown_script is not defined.

Thu Jan 19 20:32:12 2017 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

5.5.启动监控

启动命令

[root@node4 ~]# nohup masterha_manager --conf=/etc/mha/app1.conf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 & [1] 50429

验证是否启动成功

[root@node4 ~]# masterha_check_status --conf=/etc/mha/app1.cnf app1 (pid:50429) is running(0:PING_OK), master:192.168.100.201

6.测试

(1)手动关闭主库,模拟主库当机

[root@node1 ~]# /etc/init.d/mysqld stop

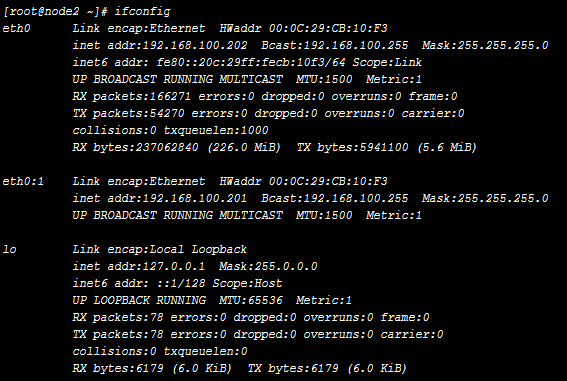

(2)验证虚拟IP已经在node2节点启动

(3)验证node3从节点,已经设置master服务器地址为node2节点。

(4)验证邮件已经发出

如果上面的检查项,都没有问题,那么MHA就是配置正常,限于篇幅问题,更多内容,需要你自己去探索。